DIY Marketing Research Guide

Empowering early-stage and continuous learners of self-service marketing research.

Let’s begin by outlining our assumptions. This will help us align our understanding, facilitate your success, and identify realistic moments to seek assistance.

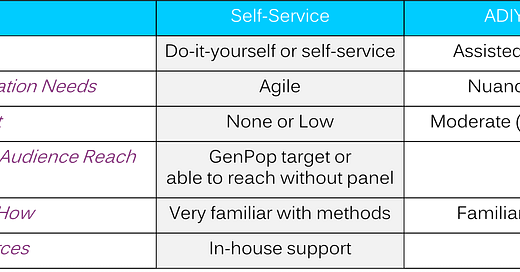

The table below offers guidelines for each tier of marketing research, mapped to your information needs, budget, target audience, expertise, and available resources.

As the table suggests, we assume your data needs are simple or flexible, allowing for quick data collection on a limited budget. Your odds of success improve if you can access the sample for free or if the target audience isn't too narrow. Familiarity with survey design, programming, fielding, and analysis will also boost success, as will good in-house support. As for resourcing, AI features, both those already deployed and those still in development, will increasingly help fill the gaps.

First, let's assume you will design a straightforward survey that includes five screening questions to target the appropriate audience. Following that, you will ask ten primary questions before concluding with demographic data collection.
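If it helps to keep this blueprint in view while drafting, you can jot it down as a simple outline. The Python sketch below is one hypothetical way to do that. The section names and question IDs (S1, Q1, D1_age, and so on) are illustrative placeholders, and the four demographic items are an assumption, since the guide doesn't fix that number.

```python
from dataclasses import dataclass, field


@dataclass
class Section:
    name: str
    questions: list[str] = field(default_factory=list)


# Hypothetical blueprint: 5 screeners, 10 main questions, then demographics.
# The demographic items below are placeholders, not a recommended set.
blueprint = [
    Section("Screener", [f"S{i}" for i in range(1, 6)]),
    Section("Main", [f"Q{i}" for i in range(1, 11)]),
    Section("Demographics", ["D1_age", "D2_gender", "D3_region", "D4_income"]),
]

for section in blueprint:
    print(f"{section.name}: {len(section.questions)} questions")

# Total length is a quick proxy for respondent burden.
total = sum(len(s.questions) for s in blueprint)
print(f"Total: {total} questions")
```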

Screener Design Considerations

Here are a few best practices to get you up and running:

Effective screener questions follow a deliberate strategy: they filter out unqualified respondents while retaining those who match your target audience.

Clearly define the characteristics of the participants you wish to include in your study, which could encompass demographic details, experience levels, or particular behaviors and preferences.

Start with broader questions to capture a diverse array of respondents, then narrow down with specific questions to pinpoint your target audience.

Avoid yes/no questions as they can produce less reliable data. Instead, opt for multiple-choice questions with mutually exclusive options to obtain more detailed responses.

Ensure that questions are unbiased and do not imply a preferred answer, using neutral language and balanced answer choices.

Use simple, jargon-free language. Questions should be straightforward to minimize confusion and ensure accurate responses.

Arrange your questions so that those critical to determining eligibility appear first. This helps disqualify ineligible participants early, conserving time and resources.

Keep the screener brief to maintain participant engagement. A lengthy screener may cause drop-offs and frustration if participants are disqualified after spending considerable time.

Design a few red herring questions to identify and exclude respondents who might not be truthful about their qualifications.
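The ordering, early-termination, and red-herring advice above can be sketched as simple screening logic. This is a minimal Python illustration, not how any particular survey platform implements it: the question IDs, answer options, and the fictitious brand "Zentrova" used as a red herring are all hypothetical.

```python
# Each screener: (question_id, set of qualifying answers). Ordered with the
# most critical eligibility checks first so ineligible respondents exit early.
SCREENERS = [
    ("S1_category_purchase", {"Within the past month", "1-3 months ago"}),
    ("S2_role", {"Primary decision maker", "Shared decision maker"}),
    ("S3_age", {"25-34", "35-44", "45-54"}),
]

# Red herring (hypothetical): a fictitious brand in a "brands used" list.
# Anyone who claims to use it is excluded.
RED_HERRING_QUESTION = "S4_brands_used"
FAKE_BRAND = "Zentrova"


def screen(answers: dict) -> tuple[bool, str]:
    """Return (qualified, reason) for one respondent's screener answers."""
    for question_id, qualifying in SCREENERS:
        # Terminate at the first question the respondent fails.
        if answers.get(question_id) not in qualifying:
            return False, f"terminated at {question_id}"
    if FAKE_BRAND in answers.get(RED_HERRING_QUESTION, set()):
        return False, "failed red herring"
    return True, "qualified"


print(screen({"S1_category_purchase": "More than a year ago"}))
# (False, 'terminated at S1_category_purchase')
print(screen({
    "S1_category_purchase": "Within the past month",
    "S2_role": "Primary decision maker",
    "S3_age": "35-44",
    RED_HERRING_QUESTION: {"Brand A", "Zentrova"},
}))
# (False, 'failed red herring')
```

Note that each screener uses mutually exclusive multiple-choice options rather than yes/no, which makes the qualifying sets easy to define.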

See this Pew Research Center article for a few more considerations:

Writing Survey Questions | Pew Research Center

Main Survey Design Considerations

Crafting the main section of an online survey involves several essential steps to make it effective and engaging and to ensure it collects the data you need.

Establish clear objectives for your survey. Determine what information you want to collect and how you will use it. This will help you choose the appropriate question types and structures.

Incorporate a combination of question types that align with your goals. Common question formats include:

Multiple-Choice Questions: Effective for quantitative responses and straightforward analysis.

Rating Scale Questions: Suitable for evaluating the intensity of opinions or levels of satisfaction.

Likert Scale Questions: Useful for assessing attitudes across a range from "strongly agree" to "strongly disagree".

Open-Ended Questions: Offer respondents the opportunity to provide detailed qualitative feedback.
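Because each question type is summarized differently, it helps to know up front how you'll tabulate it. The Python sketch below uses made-up responses to show one common summary per closed-ended type: option shares for multiple choice, a mean for a rating scale, and a distribution for a Likert item. Open-ended answers usually need to be coded into themes first, so they are left out.

```python
from collections import Counter
from statistics import mean

# Hypothetical responses for three closed-ended question types.
multiple_choice = ["Online", "In store", "Online", "Online", "Phone"]
rating_1_to_10 = [8, 6, 9, 7, 10]
likert_1_to_5 = [5, 4, 2, 4, 3]  # 1 = strongly disagree, 5 = strongly agree

# Multiple choice: share of respondents selecting each option.
counts = Counter(multiple_choice)
shares = {option: n / len(multiple_choice) for option, n in counts.items()}

# Rating scale: average intensity of opinion or satisfaction.
avg_rating = mean(rating_1_to_10)

# Likert: share of respondents at each point of the agree/disagree range.
likert_dist = {
    point: likert_1_to_5.count(point) / len(likert_1_to_5) for point in range(1, 6)
}

print(shares)
print(avg_rating)
print(likert_dist)
```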

Start with easy and engaging questions to capture respondent interest and ease them into the survey.

Arrange questions from general to specific.

Place any personal or sensitive questions towards the end to minimize early drop-offs.

Keep the survey brief to avoid respondent fatigue and maintain high completion rates.

Avoid jargon and ensure that your questions are easily understood.

Use a uniform format throughout the survey to reduce confusion.

Ensure each section or question type has clear instructions to guide respondents.

Adhere to ethical guidelines: obtain informed consent and maintain respondent anonymity where necessary.

Optional: Conduct a test run to identify issues with question clarity or survey flow and use the feedback to make adjustments.

For those wanting more information, here's a quick methods 101 primer from the Pew Research Center:

Collecting Demographic Data

For demographic data collection, see these guidelines to align with best practices:

And see here for additional suggestions and to get going quickly:

Survey Platforms for Data Collection

If you have Office 365 or Google Workspace as well as access to the audience of interest, Microsoft Forms or Google Forms may work for your data collection needs.

Otherwise, for a dedicated survey platform you have many choices, including QuestionPro, which offers a free account with a useful set of features (summarized below).

If you decide to use QuestionPro, see this video (from 10/12/2022), which covers how to create a survey in QuestionPro, including how to use templates. QuestionPro also offers an AI survey-development assistant, but you'll need to upgrade to access it.

Quality Assurance, Programming, & Fielding

Now let’s assume you’re on your way with a great survey design and are ready to move into the core work: confirming the design is right, verifying that the right data will be collected, checking that the survey is programmed correctly, and addressing the technical considerations for sample management and fielding.

Before you proceed, remember that the key to an effective survey lies in planning how you will use the data. The worst-case scenario is realizing that you have collected incorrect or insufficient information that doesn’t support your story and insights.
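One lightweight way to plan how you'll use the data is to map each objective to the questions that will answer it and check the questionnaire against that map before fielding. The Python sketch below assumes a hypothetical analysis plan and hypothetical question IDs; it flags objectives that are missing a question and questions that aren't tied to any objective.

```python
# Hypothetical analysis plan: each objective lists the questions that feed it.
analysis_plan = {
    "Size interest in the new service": ["Q1", "Q2"],
    "Identify top unmet needs": ["Q3", "Q4", "Q5"],
    "Compare interest by segment": ["Q1", "D1_age", "D3_region"],
}

# Questions currently programmed in the (hypothetical) questionnaire.
questionnaire = {"Q1", "Q2", "Q3", "Q4", "D1_age", "D2_gender"}

# Objectives that depend on a question the questionnaire does not ask yet.
gaps = {
    objective: sorted(set(needed) - questionnaire)
    for objective, needed in analysis_plan.items()
    if set(needed) - questionnaire
}

# Questions asked but not tied to any objective (candidates to cut).
used = {q for needed in analysis_plan.values() for q in needed}
unused = sorted(questionnaire - used)

print(gaps)
# {'Identify top unmet needs': ['Q5'], 'Compare interest by segment': ['D3_region']}
print(unused)
# ['D2_gender']
```

Here, Q5 and D3_region still need to be added, and D2_gender is a candidate to cut unless it supports an objective that hasn't been written down yet.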

AI Productivity Enhancements

If you follow #AguirreAnalytics on LinkedIn, you may have seen this post from early July about AI and its expected impact on marketing research.

There were two main conclusions:

AI would improve productivity and data quality, and

what was most relevant was “investing in the right talent and embracing an incremental and iterative approach.”

This means that as you embrace DIY research, including with an AI assistant, you’ll become increasingly productive across writing, analysis, code development, and knowledge generation.

That is a real advantage.

What will be more challenging is aligning design elements with the area of investigation and then developing the right insights. The degree of difficulty depends on which area of investigation you’re focused on.

Typically, in your research journey you’ll be asking questions about:

Brands,

Innovations,

Markets,

Competitors, or

Price

That makes roughly five areas of investigation. Across these areas, basic DIY studies are best suited to learning about innovation (e.g., new products or services, including how well their features perform, how well they address unmet needs or reduce current pain points, and what to optimize and how). Savvy, advanced DIY researchers, however, will be able to cover more areas.

One standard design element that may be useful for your innovation studies is a concept index score.

Concept Index Score

This design uses an attribute battery to assess how likely your target audience is to purchase your product or service compared with other options, and presents that purchase intent on a scorecard alongside the other attributes.

A core part of the scorecard is an index that weights all attributes but gives purchase intent the heaviest weight, so that it reflects what should matter most in your decision making. The index is the mechanism for rank-ordering product alternatives and serves as a proxy for how well your concept will do in the market.
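The guide doesn't spell out the exact weighting, so the following is only a minimal sketch of one way such an index could work: a weighted average of attribute scores (each 0 to 100) in which purchase intent carries the largest weight, then used to rank concepts. The attribute names, the weights, and the concept scores are all hypothetical.

```python
# Hypothetical weights: purchase intent gets the largest share. The weights
# sum to 1, so the index stays on the same 0-100 scale as the attribute scores.
WEIGHTS = {
    "purchase_intent": 0.40,
    "uniqueness": 0.15,
    "relevance": 0.15,
    "believability": 0.15,
    "value_for_money": 0.15,
}


def concept_index(scores: dict[str, float]) -> float:
    """Weighted average of a concept's attribute scores (each 0-100)."""
    return sum(WEIGHTS[attr] * scores[attr] for attr in WEIGHTS)


# Hypothetical scorecard inputs for two concepts.
concepts = {
    "Concept A": {"purchase_intent": 42, "uniqueness": 35, "relevance": 50,
                  "believability": 48, "value_for_money": 30},
    "Concept B": {"purchase_intent": 30, "uniqueness": 55, "relevance": 45,
                  "believability": 40, "value_for_money": 38},
}

# Rank-order the alternatives by index, highest first.
ranked = sorted(concepts, key=lambda name: concept_index(concepts[name]), reverse=True)
for name in ranked:
    print(f"{name}: {concept_index(concepts[name]):.1f}")
```

With these made-up numbers, Concept B has the higher average across the four secondary attributes, yet Concept A ranks first because purchase intent carries the most weight.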

The results are evaluated in a table like this:

To make interpretation intuitive, all scores are shown as percentages ranging from 0 to 100. The scores come from the attribute battery and reflect the share of respondents selecting the top-box response on a Likert scale. Using top-box scores is the more robust way to build scorecards.
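Top-box scoring is simple arithmetic: count the respondents who chose the highest scale point and divide by all respondents. A minimal Python sketch, assuming a 5-point Likert scale and made-up responses for a single attribute:

```python
# Hypothetical 5-point Likert responses for one attribute
# (1 = strongly disagree ... 5 = strongly agree).
responses = [5, 4, 5, 3, 2, 5, 4, 1, 5, 3]


def top_box_pct(responses: list[int], top: int = 5) -> float:
    """Percent of respondents choosing the top box (highest scale point)."""
    return 100 * sum(1 for r in responses if r == top) / len(responses)


print(top_box_pct(responses))
# 40.0
```

Repeating this for each attribute yields the 0 to 100 scores that feed the scorecard.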

This should bring to life how design, area of investigation, and analytic approach come together to build a useful insight story.

If you found the concept index score useful, feel free to reach out so we can evaluate your innovations together (link).

Follow us to join the conversation. We’d love to hear what else you want to learn.

© 2024 Aguirre Analytics Consulting. All rights reserved.